Several months have passed since my previous “mutex wait” post. I have been very busy with work and conference presentations. Thanks to everyone who attended my talks at the UKOUG2011, Hotsos2012 and Medias2012 conferences and at several seminars for the inspiring questions and conversations.

I. Unexpected change.

Now it is time to discuss how contemporary Oracle waits for mutexes. My previous posts described the evolution of the “invisible and aggressive” 10.2-11.1 mutex waits into the fully accounted and less aggressive 11gR2 mutexes. Surprisingly, Oracle 11.2.0.2.2 (11.2.0.2 PSU2), which appeared in April 2011, demonstrated almost negligible CPU consumption during mutex waits. Recall that in my testcase one session waits statically for the “Cursor Pin” mutex for 49 s:

sqlplus /nolog @cursor_pin_s_waits.sql ...

Top 5 Timed Foreground Events

| Event | Waits | Time(s) | Avg wait (ms) | % DB time | Wait Class |
|---|---|---|---|---|---|
| cursor: pin S | 1 | 49 | 49265 | 92.56 | Concurrency |
| DB CPU | | 4 | | 8.10 | |
| log file sync | 3 | 0 | 7 | 0.04 | Commit |
| control file sequential read | 119 | 0 | 0 | 0.01 | System I/O |
| db file sequential read | 107 | 0 | 0 | 0.01 | User I/O |

In 11.2.0.2.2 the “Mutex Sleep Summary” section of the AWR report shows thousands of sleeps instead of the millions seen in previous versions. This suggests a mutex sleep duration of about 10 ms:

| Mutex Type | Location | Sleeps | Wait Time (ms) |
|---|---|---|---|
| Cursor Pin | kkslce [KKSCHLPIN2] | 4,898 | 49,265 |
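The 10 ms estimate is just the AWR wait time divided by the number of sleeps in the table above. A quick arithmetic check in Python:

```python
# Average duration of one mutex sleep, taken from the AWR "Mutex Sleep Summary"
# row for Cursor Pin / kkslce [KKSCHLPIN2] in 11.2.0.2.2.
sleeps = 4_898
wait_time_ms = 49_265

avg_sleep_ms = wait_time_ms / sleeps
print(f"average sleep: {avg_sleep_ms:.2f} ms")  # about 10 ms, i.e. 1 centisecond
```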

It turned out that Oracle 11.2.0.2.2 introduced a completely new concept of mutex wait schemes. MOS describes it in the note Patch 10411618 Enhancement to add different “Mutex” wait schemes. The enhancement allows one of three concurrency wait schemes to be selected and introduces three parameters to control mutex waits:

- _mutex_wait_scheme – which wait scheme to use:
  - 0 – Always YIELD.
  - 1 – Always SLEEP for _mutex_wait_time.
  - 2 – Exponential Backoff with maximum sleep _mutex_wait_time.

- _mutex_spin_count – the number of times to spin. Default value is 255.

- _mutex_wait_time – sleep timeout depending on scheme. Default is 1.
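To make the role of these three parameters concrete, here is a minimal Python sketch of the general structure they control: a spin of up to _mutex_spin_count polls, followed by a scheme-dependent wait. It is a toy model under stated assumptions, not Oracle's kgx code. ToyMutex, wait_once() and get_mutex() are hypothetical names, os.sched_yield() stands in for the Solaris yield() syscall, the initial yield() calls seen in real traces are ignored, and scheme 2 is reduced to a deterministic capped doubling without Oracle's randomization.

```python
import os
import threading
import time

MUTEX_SPIN_COUNT = 255  # _mutex_spin_count default
MUTEX_WAIT_TIME = 1     # _mutex_wait_time default


class ToyMutex:
    """Hypothetical exclusive mutex; a non-blocking acquire plays the atomic test-and-set."""

    def __init__(self):
        self._lock = threading.Lock()

    def try_get(self):
        return self._lock.acquire(blocking=False)

    def release(self):
        self._lock.release()


def os_yield():
    """Give up the CPU: sched_yield() where available, sleep(0) otherwise."""
    if hasattr(os, "sched_yield"):
        os.sched_yield()
    else:
        time.sleep(0)


def wait_once(scheme, attempt, wait_time=MUTEX_WAIT_TIME):
    """Simplified wait after one unsuccessful spin, selected by _mutex_wait_scheme."""
    if scheme == 0:
        os_yield()                                   # 0: always yield
    elif scheme == 1:
        time.sleep(wait_time / 1000)                 # 1: sleep _mutex_wait_time ms
    elif scheme == 2:
        cap_ms = 2000 if wait_time == 0 else wait_time * 10   # cap given in centiseconds
        sleep_ms = min(10 * 2 ** min(attempt // 2, 16), cap_ms)
        time.sleep(sleep_ms / 1000)                  # 2: capped backoff
    else:
        raise ValueError("only schemes 0, 1 and 2 are modelled here")


def get_mutex(mutex, scheme, spin_count=MUTEX_SPIN_COUNT):
    """Spin, then wait, until the mutex is obtained; return the number of waits."""
    waits = 0
    while True:
        for _ in range(spin_count):                  # one spin = spin_count polls
            if mutex.try_get():
                return waits
        wait_once(scheme, waits)
        waits += 1


if __name__ == "__main__":
    m = ToyMutex()
    m.try_get()                                      # a "holder" takes the mutex...
    threading.Timer(0.05, m.release).start()         # ...and releases it after 50 ms
    print("waits before acquisition:", get_mutex(m, scheme=1))
```

Only the wait between spins depends on the scheme; the spin itself is the same in all three.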

The note also mentioned that this fix effectively supersedes the patch 6904068 for 11g releases.

It was completely unexpected to find such a behavioral change associated with a change in the fifth digit of the Oracle version.

Let us look more closely at these new schemes.

II. Default Exponential Backoff. _mutex_wait_scheme 2.

Since 11.2.0.2.2, Oracle uses scheme 2 by default. MOS documents call this scheme “Exponential backoff”. It results in very small CPU consumption during the wait. Surprisingly, DTrace shows no exponential behavior with the default parameter values. The session repeatedly sleeps for 1 cs (10 ms):

SQL> select 1 from dual where 1=2;

kgxSharedExamine(0xDD4E2C0,mutex=0x67731388,aol=0x67551E88)

yield() call repeated 2 times

semsys() timeout=10 ms call repeated 4237 times

To reveal the exponential backoff, we need to increase the _mutex_wait_time parameter:

SQL> alter system set "_mutex_wait_time"=30;

SQL> select 1 from dual where 1=2;

kgxSharedExamine(…)

yield() call repeated 2 times

semsys() timeout=10 ms call repeated 2 times

semsys() timeout=30 ms call repeated 2 times

semsys() timeout=80 ms

semsys() timeout=70 ms

semsys() timeout=160 ms

semsys() timeout=150 ms

semsys() timeout=300 ms call repeated 159 times

The _mutex_wait_time value defines the maximum exponential backoff sleep time, in centiseconds.
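A minimal Python sketch of this cap, under loudly stated assumptions: backoff_schedule() is a hypothetical function that starts at 10 ms, doubles after every second sleep and caps the result at _mutex_wait_time centiseconds, treating _mutex_wait_time=0 as the 2 s ceiling reported in the next paragraph. Oracle's real timeouts are randomized and grow somewhat faster at first (10, 30, 80/70, 160/150, 300 ms in the trace above), so only the capping behavior should be read from this sketch.

```python
def backoff_schedule(mutex_wait_time_cs, n_sleeps, base_ms=10):
    """Hypothetical, deterministic approximation of scheme 2 sleep timeouts in ms.

    Assumptions: sleeps start at base_ms, double after every second sleep and
    never exceed _mutex_wait_time centiseconds (0 is treated as a 2 s cap).
    """
    cap_ms = 2000 if mutex_wait_time_cs == 0 else mutex_wait_time_cs * 10
    return [min(base_ms * 2 ** (i // 2), cap_ms) for i in range(n_sleeps)]


# Default _mutex_wait_time=1: the cap equals the first sleep, so no backoff is visible.
print(backoff_schedule(1, 6))    # [10, 10, 10, 10, 10, 10]
# _mutex_wait_time=30: timeouts grow until they reach the 300 ms cap.
print(backoff_schedule(30, 12))  # [10, 10, 20, 20, 40, 40, 80, 80, 160, 160, 300, 300]
```

With the default value the cap is reached on the very first sleep, which is why the default trace shows only repeated 10 ms sleeps.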

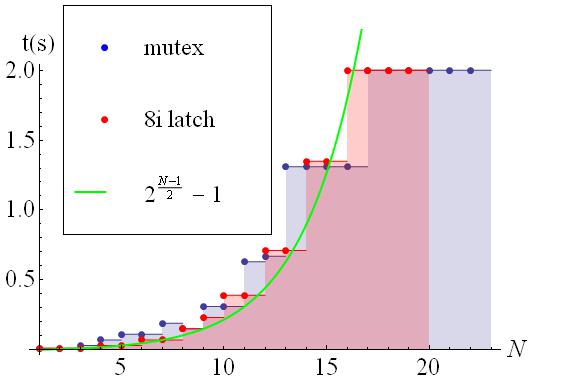

When I set _mutex_wait_time=0, the maximum timeout became 2 seconds. This closely resembles the Oracle 8i latch acquisition algorithm. However, unlike the old latches, mutex exponential backoff timeouts are randomized and vary slightly between platforms. The following graph compares typical timeouts with their exponential fit:

Because of the exponential growth, _mutex_wait_scheme=2 is insensitive to the _mutex_wait_time value. Indeed, only the sleeps after the fifth unsuccessful spin are affected by this parameter. Look at the graph comparing the throughputs (number of PL/SQL calls per second) of my “library cache: mutex X” testcase with the maximum sleep time set to 1/100 s and 2 s:

The two graphs differ by less than five percent. Therefore, I doubt that adjusting _mutex_wait_time for the default scheme 2 can help performance tuning. I think its primary goal is to work around bugs that cause abnormally long mutex holding times.

Why error bars on graphs? You may wonder why I put vertical error bars on my graphs. The reason is that a contemporary Oracle instance is “alive”. Despite all my efforts to create a “clean” testcase workload, the database “breathes”. AWR housekeeping, advisor runs, SGA autotuning and statistics gathering affected the experiments unpredictably. This is why I had to run the test workload several times and average the results statistically. The error bar shows the interval in which the result will likely fall if you repeat the experiment.
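One common way to turn repeated runs into a point with an error bar is the mean plus or minus a multiple of the standard error. The sketch below is an assumption, not necessarily the calculation behind the graphs in this post: mean_with_error() is a hypothetical helper using the normal approximation with z=1.96 (roughly a 95% interval).

```python
import math
import statistics


def mean_with_error(samples, z=1.96):
    """Return (mean, half-width) of an approximate 95% interval for repeated runs.

    Normal approximation: mean +/- z * s / sqrt(n). With only a handful of runs a
    Student t quantile would be more appropriate; which interval the graphs
    actually use is not stated, so this choice is an assumption.
    """
    if len(samples) < 2:
        raise ValueError("need at least two runs to estimate the spread")
    mean = statistics.mean(samples)
    stderr = statistics.stdev(samples) / math.sqrt(len(samples))
    return mean, z * stderr
```

Each throughput point would then be plotted at the mean, with a vertical bar of plus or minus the returned half-width.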

I would like to emphasize again that the default mutex wait is not exponential. Without underscore parameters, contemporary Oracle behaves very similarly to patch 6904068. It differs only by two spin and yield() cycles at the beginning. These two cycles change mutex wait performance drastically. I will explore this in a separate post.

III. Simply SLEEPS. _mutex_wait_scheme 1.

In wait scheme 1, the session repeatedly requests 1 ms sleeps by default:

SQL> select 1 from dual where 1=2;

kgxSharedExamine(…)

yield()

pollsys() timeout=1 ms call repeated 25637 times

The _mutex_wait_time parameter controls the sleep timeout, in milliseconds:

SQL> alter system set "_mutex_wait_time"=30;

SQL> select 1 from dual where 1=2;

kgxSharedExamine(…)

yield()

pollsys() timeout=30 ms call repeated 1578 times

Again, with _mutex_wait_time=10, an Oracle session waits for a mutex much as it did with patch 6904068. The difference is one spin and yield() cycle and a different syscall. It is not surprising that SAP recommends this combination of mutex wait parameters for Unix platforms.

Unlike the previous scheme, _mutex_wait_scheme 1 is sensitive to _mutex_wait_time tuning. The next graph demonstrates that at moderate concurrency, small mutex sleep times perform better and result in higher throughput:

However, in the region of extremely high mutex concurrency, larger mutex sleep times are preferable, resulting in lower CPU consumption and better throughput:

Therefore, tuning the _mutex_wait_time parameter is important for the performance of mutex wait scheme 1. On some platforms (Solaris, Windows), small values of _mutex_wait_time are effectively rounded to the granularity of OS waits. This will be the topic of another post.

Setting _mutex_wait_time=0 results in mutex wait scheme 0 behavior.
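The trade-off between small and large _mutex_wait_time values in scheme 1 can be illustrated with a deliberately crude Python model. scheme1_cost() is hypothetical: it assumes every pollsys() sleep lasts exactly _mutex_wait_time ms, ignores spinning, CPU scheduling and OS timer granularity, and re-checks the mutex only on wake-up. It counts the sleeps needed to outlast a mutex held for hold_ms and the extra latency between the release and the waiter noticing it.

```python
import math


def scheme1_cost(hold_ms, mutex_wait_time_ms):
    """Crude model of one scheme 1 wait for a mutex held for hold_ms.

    Assumes each pollsys() sleep lasts exactly mutex_wait_time_ms and the mutex
    is re-checked only on wake-up. Returns (number of sleeps, extra latency in
    ms between the mutex release and the waiter noticing it).
    """
    if mutex_wait_time_ms <= 0:
        raise ValueError("_mutex_wait_time=0 switches to scheme 0 behavior")
    sleeps = math.ceil(hold_ms / mutex_wait_time_ms)
    overshoot_ms = sleeps * mutex_wait_time_ms - hold_ms
    return sleeps, overshoot_ms


# A short 5 ms hold, for several sleep times.
for wait_time in (1, 10, 30):
    print(wait_time, scheme1_cost(hold_ms=5, mutex_wait_time_ms=wait_time))
```

In this model short sleeps cost more syscalls but notice the release almost immediately, while long sleeps save syscalls at the price of extra latency. That is one plausible reading of the two concurrency regimes described above, not a reproduction of the measurements.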

IV. Classic Yields. _mutex_wait_scheme 0.

I explored _mutex_wait_scheme 0 in detail previously, so here is just a brief summary. This scheme:

- Consists mostly of repeated spin and yield() cycles.

- Differs from the aggressive mutex waits used in previous Oracle versions by a 1 ms sleep after every 99 yields. This 1 ms sleep significantly reduces CPU consumption and increases robustness under high contention.

- The sleep duration and yield frequency are tunable by the _wait_yield_mode, _wait_yield_sleep_time_msecs and _wait_yield_sleep_freq parameters.

- One can specify different wait modes for standard and high priority processes.

Scheme 0 is very flexible. It allows almost any combination of yields and sleeps, including the 10g and patch 6904068 behaviors.
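The yield/sleep pattern of scheme 0 can be sketched as a simple action sequence, with the spins between actions left implicit. The function scheme0_actions() and its defaults are hypothetical: sleep_freq=100 and sleep_ms=1 merely reproduce the “1 ms sleep after every 99 yields” pattern summarized above and are not claimed to be the actual defaults of _wait_yield_sleep_freq or _wait_yield_sleep_time_msecs.

```python
def scheme0_actions(n_waits, sleep_freq=100, sleep_ms=1):
    """Hypothetical model of the scheme 0 wait sequence.

    After each unsuccessful spin the session yields, except that every
    sleep_freq-th wait is a sleep of sleep_ms instead (99 yields, then 1 sleep).
    """
    actions = []
    for i in range(1, n_waits + 1):
        if i % sleep_freq == 0:
            actions.append(f"sleep {sleep_ms} ms")
        else:
            actions.append("yield()")
    return actions


acts = scheme0_actions(1000)
print(acts.count("yield()"), acts.count("sleep 1 ms"))  # 990 10
```

Changing sleep_freq or sleep_ms shifts the balance between CPU-burning yields and CPU-saving sleeps, which is the flexibility the tunable parameters provide.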

I have described all the wait schemes from note 10411618.8. But there is also an undocumented wait scheme.

V. Pure Spins. _mutex_wait_scheme other.

What happens if I set _mutex_wait_scheme to an integer not in (0,1,2)? Let me perform the experiment. Here is the AWR report for my testcase on Exadata:

SQL> alter system set "_mutex_wait_scheme"=3;

SQL> @cursor_pin_s_waits.sql ...

Top 5 Timed Foreground Events

| Event | Waits | Time(s) | Avg wait (ms) | % DB time | Wait Class |
|---|---|---|---|---|---|
| DB CPU | | 55 | | 99.84 | |
| cursor: pin S | 1 | 49 | 49261 | 89.05 | Concurrency |

We see that the static 49 s mutex wait in scheme 3 consists of 100% CPU. The “Mutex Sleep Summary” section of the AWR report shows tens of millions of sleeps, far more than in any of the previous wait schemes:

| Mutex Type | Location | Sleeps | Wait Time (ms) |
|---|---|---|---|
| Cursor Pin | kkslce [KKSCHLPIN2] | 21,838,654 | 0 |

On Solaris, DTrace shows that the session repeatedly spins for the mutex with rare yield() syscalls:

SQL> select 1 from dual where 1=2;

kgxSharedExamine(0x10CFBAEC0,mutex=0x3C7FC42B0,aol=0x3C82C6A10)

kgxWait()

yield()

kgxWait() repeated 65536 times

yield()

kgxWait() repeated 65536 times

yield()

...

Each call of the Oracle function kgxWait() is one spin. It consists of 255 (_mutex_spin_count) polls of the mutex location. There are no OS syscalls between spins in this wait scheme. Once every 64K (65,536) unsuccessful spin cycles, the Oracle process yields the CPU. This behavior is the same for all _mutex_wait_scheme values greater than 2 that I have tried.
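The arithmetic behind this scheme is easy to check in Python. The first figure follows directly from the text. The second rests on an assumption that Oracle does not document: that each “sleep” counted in the Mutex Sleep Summary corresponds to one kgxWait() spin, with the 49,261 ms “cursor: pin S” wait time from the Top 5 events table spread over them.

```python
MUTEX_SPIN_COUNT = 255       # polls of the mutex location per kgxWait() spin
SPINS_PER_YIELD = 65_536     # 64K unsuccessful spins between yield() calls

polls_per_yield = MUTEX_SPIN_COUNT * SPINS_PER_YIELD
print(f"{polls_per_yield:,} polls between yield() calls")  # 16,711,680 polls between yield() calls

# Assumption: AWR "Sleeps" for scheme 3 counts kgxWait() spins.
sleeps = 21_838_654
wait_time_ms = 49_261        # "cursor: pin S" wait time from the AWR report
us_per_spin = wait_time_ms * 1000 / sleeps
print(f"~{us_per_spin:.2f} us per spin")
```

Under that assumption one 255-poll spin takes a couple of microseconds, so the session goes for millions of polls without giving the OS any opportunity to reschedule.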

This is the classic spinlock wait scheme. It is superaggressive even compared to 10g mutexes. The OS is not given a chance to transfer the rest of the time quantum to another process, including the mutex holder. In theory, it should deliver the maximum possible performance as long as free CPU resources are available.

It seems that this scheme was accidentally enabled by default in the original 11.2.0.2.2 PSU. Almost immediately, Oracle changed the default wait scheme to 2 in Patch 12431716 Mutex waits may cause higher CPU usage in 11.2.0.2.2 PSU / GI PSU.

Summary.

Here I discussed how contemporary mutex wait schemes work in Oracle 11.2.0.2.2 through 11.2.0.3. We can choose between four basic wait schemes, ranging from superaggressive to negligible CPU consumption, and a variety of parameters to tune them. The next post will discuss when and how to use them.
